HOW TO IMPROVE GOOGLE RANKINGS IN 5 STEPS

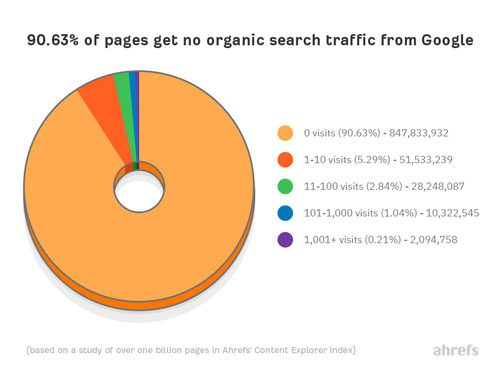

The search query “improving Google rankings” is one of the most commonly searched topics on the web, and for good reason. Did you know that over 90% of web pages (that’s billions of pages) get no organic search traffic from Google at all? That’s an alarming statistic, one uncovered by the SEO software company Ahrefs in a recently published study.

Think of all of the time (and money) you spend on copy for your website, not to mention page layout and coding. Are you wasting resources on content that never gets found through Google, or seen by people?

In this post, I will focus on 5 things you can do to improve Google rankings now. While it’s true that getting on, and staying on, page 1 of Google search takes time (depending on the topic and keyword competitiveness), the 5 steps I’ll share in this post can have an immediate impact on SEO and on improving your Google rankings.

How to Improve Google Rankings: Step 1 — Keyword Research

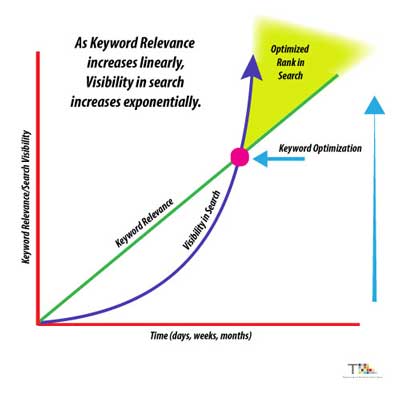

Keyword research is a critical step that is too often skipped when creating content for the web. If your pages aren’t ranking high in search, there’s a good chance it’s because you are not using the right keywords.

A relevant keyword is one that, by search engine measures, has a high degree of association with the general topic or theme of the page or pages that it appears on.

When someone searches, Google looks for the pages that are most relevant to the words in that query. Pages built around the terms people search for most often therefore have more opportunities to rank, which is why it pays to know which terms those are.

Keyword research is the process of finding the terms people search for, and the pages that already rank well for the keywords you want to rank for. Once you’ve done that, you can create your own take on that content, integrating those highly relevant keywords in your copy.

With this keyword research at hand, you can then set out to create pages that have a much higher chance of getting found through search.

Think about keyword research from a new product development perspective. Would you ever introduce a product or service to the market without having done some research? Without knowing that there was some demand for that product or service? Probably not.

Keyword research follows the same principle. Why add website copy that no one is interested in, joining the ranks of the 90% of pages that never get any traffic from Google in the process?

Keyword research helps you find highly successful content using similar topics or themes — content that is already ranking well. You can use these insights to create copy for your website that has a much-improved chance of getting seen and read, because you already know there is interest in the topic or theme.

Duplicate Copy

Before discussing the steps in keyword research, it’s important to note that this process is not about creating and publishing duplicate, or even similar, copy.

Google and other search engines, like Bing and Yahoo, take a dim view of duplicate content (which their bots can detect when crawling). Duplicate pages may be filtered out of search results or ranked lower, and deliberately copied content can be penalized.

So just to be clear, effective keyword research is not about finding and re-using or re-purposing content or copy that is already out there.

Keyword Research Steps

There are a number of good tools for doing keyword research. In this blog post, rather than focus on specific tools and services, I’m going to cover a few general steps that pretty much all of the tools and services can handle.

Each tool has a different way of handling the steps, but basically the process is the same.

IDENTIFY CORE KEYWORDS

The first step is to identify your core keywords. These keywords should be more generic and less brand-specific, because you are looking for general terms that people will be searching for. Branded terms can be part of your keyword research strategy, but in the initial steps look for generic keywords that define or identify the product or service you are promoting.

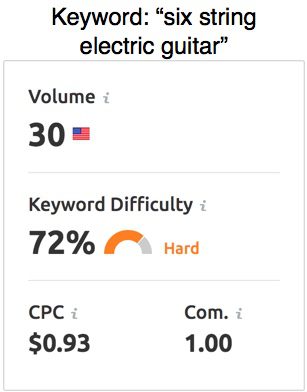

For example, if you’re an electric guitar manufacturer, core keywords you focus on could include “six string electric guitar” or “electric guitar with a maple neck.”

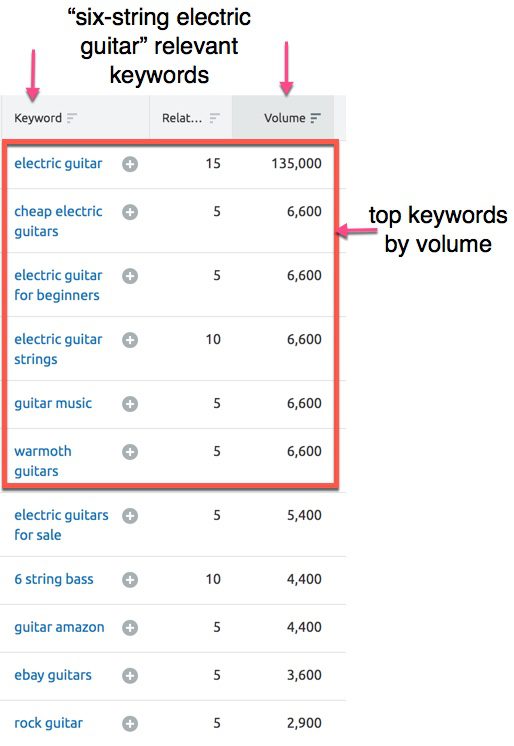

See the data below from the SEMRush keyword service.

To begin, start with a list of about 15 or 20 core keywords. As an SEO consultant, I often tell clients that it’s best to include keywords you are already using on your website. That way, your initial SEO efforts will support existing content that already has some traction in search (hopefully!).

Once you have that list of 15 to 20 keywords, use a keyword research tool such as SEMRush, Moz, Google’s Keyword Planner, or Ahrefs, and enter those keywords one by one. The tool will then display a list of related keywords based on search queries and search volume.

You’ll also find additional competitive data, such as DA (Domain Authority), PA (Page Authority), and KD (Keyword Difficulty).

For example, let’s say your core keyword “six-string electric guitar” yielded a list of 1,100 related keywords in SEMRush. Sort this list according to volume, selecting the top five relevant keywords by volume.
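If you export the related-keyword list from your tool, the sort-and-select step is easy to script. Below is a minimal Python sketch, assuming each exported row has a keyword, a monthly search volume, and a relevance flag you set yourself. The class and function names are hypothetical, and the sample volumes are invented for illustration, not real SEMRush data.

```python
from dataclasses import dataclass


@dataclass
class KeywordRow:
    keyword: str
    volume: int             # monthly search volume reported by your tool
    relevant: bool = True   # your own judgment: does it fit your product?


def top_keywords(rows: list[KeywordRow], n: int = 5) -> list[KeywordRow]:
    """Return the n highest-volume keywords that were marked relevant."""
    relevant = [r for r in rows if r.relevant]
    # Highest volume first; Python's sort is stable, so ties keep export order.
    return sorted(relevant, key=lambda r: r.volume, reverse=True)[:n]


if __name__ == "__main__":
    # Hypothetical rows; real numbers come from your keyword tool's export.
    rows = [
        KeywordRow("electric guitar for beginners", 9900),
        KeywordRow("six string electric guitar", 1300),
        KeywordRow("electric guitar strings", 14800, relevant=False),
        KeywordRow("cheap electric guitar", 5400),
        KeywordRow("electric guitar with maple neck", 390),
        KeywordRow("best electric guitar", 12100),
        KeywordRow("electric guitar amp", 8100, relevant=False),
    ]
    for row in top_keywords(rows):
        print(f"{row.keyword}: {row.volume}")
```

Filtering for relevance before sorting matters: some of the highest-volume related terms (accessories, in this made-up list) aren’t about your product at all.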

With these five keywords in hand, you now have two basic options for content development.

The first is to create a new page or blog post centered around each of the five keywords. When it comes to developing new content in the digital publishing era, the 80/20 rule still applies, but note that this rule has been flipped around.

Before SEO and keyword-centric content had much impact, the basic principle for content development was to spend 20% of your time researching the topic and 80% of the time wordsmithing the copy to appeal to readers.

Today, best practices for web copy essentially flip that ratio: spend 80% of the time on keyword research (using tools like SEMRush, Moz, Ahrefs, etc.) to help ensure your content gets discovered by the search engines, and 20% of the time crafting the copy. This is a guideline, of course, and may need to be adjusted depending on the complexity of your topic. But in general, it’s a good rule to follow.

Note that this keyword focus shouldn’t be just for a single page of copy on the website. Now that you’ve done your research and have a list of keywords that you know have traction in the market, consider creating what’s called a “content cluster” based around this core keyword.

Let’s continue with the related keyword “electric guitar for beginners” as an example (this is one of the keywords in the top 5 from the list above).

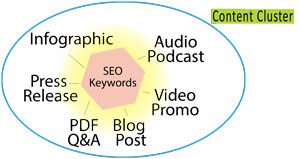

A content cluster is an integrated series of content pieces connected by a common SEO keyword or keywords. In this case, the primary page in the cluster lives on your website, but you could also create a blog post that offers a different perspective or more detail on the topic, built around the same keyword.

The blog post could link to a YouTube video tutorial about the benefits of your guitar for beginners, with the core keyword “electric guitar for beginners” mentioned several times in the voiceover and included in the subtitles, so the search engines can index it when they crawl YouTube.

Additionally, you could create an infographic and post it on the website with alt text (the image’s alt attribute) that includes that keyword, and then record a podcast interview with a guitar player who purchased your model and had a great experience.

You get the point. Over time this connected series of content, using the keyword that you gleaned from your keyword research, will continue to gain traction in Google because of the integration and connectedness of the keyword and the content itself.
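Alt text is easy to forget once a cluster grows to dozens of pages and images. Here is a minimal Python sketch, using the built-in html.parser module, that lists the images on a page whose alt attribute is missing or empty; the function name and sample file names are hypothetical.

```python
from html.parser import HTMLParser


class MissingAltFinder(HTMLParser):
    """Collects the src of every <img> whose alt text is missing or blank."""

    def __init__(self) -> None:
        super().__init__()
        self.missing: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attributes = dict(attrs)
        alt = attributes.get("alt")
        # An empty alt is correct for purely decorative images,
        # so treat the result as a list to review, not to fix blindly.
        if alt is None or not alt.strip():
            self.missing.append(attributes.get("src") or "(no src)")


def images_missing_alt(html: str) -> list[str]:
    """Return the src of each image on the page that lacks alt text."""
    finder = MissingAltFinder()
    finder.feed(html)
    finder.close()
    return finder.missing
```

Self-closing tags such as <img ... /> are handled too, because HTMLParser passes them through handle_starttag by default.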

How to Improve Google Rankings: Step 2 — Analyze Website Behavior Flow

Website behavior — the way users are interacting with pages on your website — is often overlooked as an important step in how to improve your Google rankings. But it’s one of the most valuable ways to increase your visibility in search.

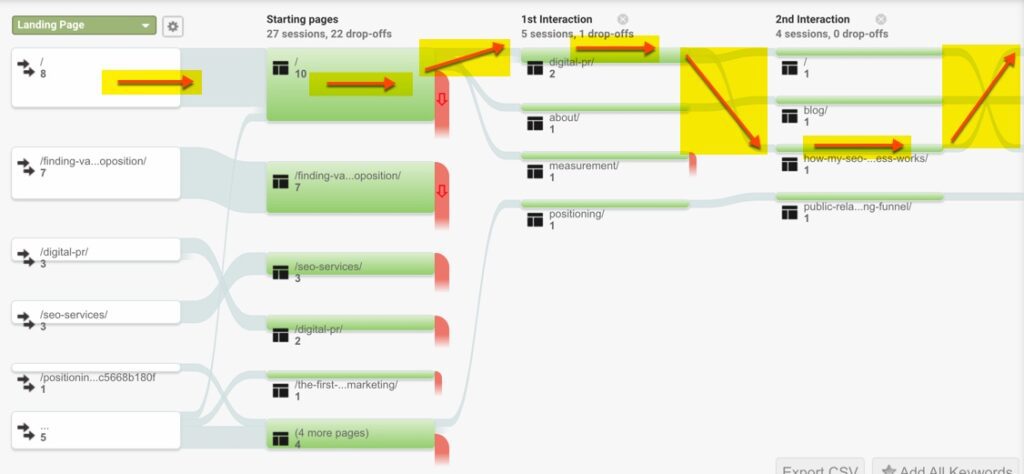

Think about it — if you had insight into the sequence of page views on your website — for example, a user’s journey from the homepage, to a product page, to a blog post, to an email signup link — wouldn’t that be valuable information?

Google Analytics has a built-in Behavior Flow report for this in Universal Analytics (in Google Analytics 4, the closest equivalent is the Path exploration report). Essentially, behavior flow analysis shows you visitor patterns in terms of where users go on your site from page to page.

Having this information can tell you what pages are working, and what pages are not working based on important criteria like Time on Page, Bounce Rate, and other data in Google Analytics.

For example, let’s say you log into the behavior flow tab in Google Analytics to see traffic patterns from your homepage. In that analysis, you see that 70% of users who came to your homepage exited without going to any other pages. This is what’s known as a “Bounce.” (I discuss Bounce Rate in more detail below.)

From there, using the Behavior Flow chart, you notice that a number of visitors went to one of your product pages; let’s call it the “Product A” page. Following traffic from that page in the chart, you see that a number of users exited your site, and those who stayed navigated back to the homepage.

This is very likely a sign of a problem with your copy or your navigation. Based on this behavior analysis, it seems that users returning to the homepage from the second page (the Product A page) did not find what they were looking for. If they had, you would likely see them click through to a related page from the Product A page.
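The Behavior Flow chart is visual, but the idea behind it (for each page, count where visitors went next) can be sketched in a few lines of Python. This assumes you can export each session’s page path as an ordered list of URLs. The sessions below are invented to mirror the Product A example, and next_steps is a hypothetical helper, not a Google Analytics API.

```python
from collections import Counter, defaultdict

EXIT = "(exit)"  # marker meaning "left the site from this page"


def next_steps(sessions: list[list[str]]) -> dict[str, Counter]:
    """For each page, count where visitors went next (or EXIT if they left)."""
    flows: dict[str, Counter] = defaultdict(Counter)
    for path in sessions:
        for i, page in enumerate(path):
            following = path[i + 1] if i + 1 < len(path) else EXIT
            flows[page][following] += 1
    return flows


if __name__ == "__main__":
    # Hypothetical session paths, one list of URLs per visit.
    sessions = [
        ["/", "/product-a", "/"],
        ["/", "/product-a"],
        ["/", "/product-a", "/blog/beginners-guide"],
        ["/"],
        ["/", "/product-a", "/"],
    ]
    for target, count in next_steps(sessions)["/product-a"].most_common():
        print(f"/product-a -> {target}: {count}")
```

For this sample data, four sessions reach /product-a: two go back to the homepage, one leaves the site, and only one continues to a related page, which is the kind of pattern that suggests the page isn’t answering the visitor’s question.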

Having this level of insight into traffic patterns goes a long way in helping you improve your Google rankings. Google aims to rank pages that satisfy searchers, and high engagement (clicks on links, extended time on site, etc.) is a good sign that a page is doing that.

Armed with the knowledge that users seem confused about what to do when they arrive at the second-stage page (Product A), you would revise the copy, images, and other content on that page and continue to monitor the behavior flow analysis.

Once you start seeing longer time on page and lower bounce rate for this page, you know you’re heading in the right direction in terms of content, and should begin to see your ranking for that particular page move up in Google.

How to Improve Google Rankings: Step 3 — Reduce Page Load Times

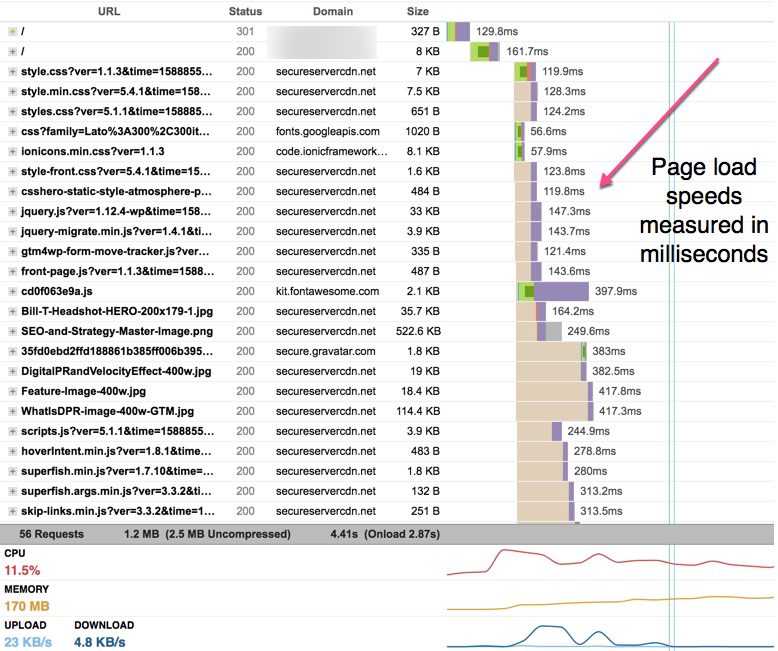

Google has made page load time an increasingly important factor in its search algorithm. Load time is the amount of time, measured in milliseconds, that it takes for all of the assets associated with your website (images, WordPress themes, plugins, etc.) to load and display.

Slow page load times affect your ranking in Google because of user experience. Google’s goal is to deliver the best possible experience for users via its SERP (Search Engine Results Page).

If an organic search listing points to a page that takes a while to load (loading in under three seconds is a common best-practice benchmark), Google sees this as a poorer user experience, and its algorithm may rank that page lower as a result.
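Tools like GTMetrix measure the full page load, including images, scripts, and rendering. As a rough first check, you can time how long the HTML document alone takes to download and compare it with the three-second guideline. A minimal Python sketch using only the standard library; time_html_download is a hypothetical helper, and because it skips images, scripts, and stylesheets, a real visitor will always wait longer than it reports.

```python
import time
import urllib.request
from typing import Callable

LOAD_BUDGET_S = 3.0  # the "under three seconds" guideline from the text


def time_html_download(
    url: str,
    fetch: Callable = urllib.request.urlopen,
    timeout: float = 10.0,
) -> tuple[float, int]:
    """Return (seconds, bytes) needed to download only the HTML at url.

    Images, scripts and stylesheets are NOT fetched, so this is a lower
    bound on what a visitor actually waits for.
    """
    start = time.perf_counter()
    with fetch(url, timeout=timeout) as response:
        body = response.read()
    return time.perf_counter() - start, len(body)
```

For example, time_html_download("https://www.example.com/") returns the elapsed seconds and the page size in bytes, with example.com standing in for your own URL. The fetch parameter lets you swap in a stub so the function can be tested without a network connection.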

There are many reasons why a page or site might load slowly, but two of the most common, and easiest to fix, are large image file sizes and bloated plug-ins. A third easy fix, adding missing metadata and meta-tags, doesn’t speed up the page itself, but it helps search engines understand it.

Large Image File Sizes

Great images can go a long way in increasing the “stickiness” factor of your site, that quality that encourages people to stay on the page longer than they might otherwise. But too often these images are uploaded to the site at sizes and/or resolutions that cause the page load time to slow down, sometimes even to a crawl, or worst case, a crash.

In my SEO consultant practice, I often talk about best practices for image file size: resize images to the pixel dimensions at which they will actually be displayed, and save them as compressed files such as JPEG (for photos) or GIF (for simple graphics). Images are often saved at 72 dpi (Dots Per Inch) for the web, but on screen it’s the pixel dimensions and compression, not the dpi setting, that determine file size. Together, these keep the file size as low as possible while keeping the image quality suited to screen viewing.
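The resize-then-compress step can be scripted. The sketch below computes display-sized dimensions with plain arithmetic and, assuming the third-party Pillow library is installed (pip install Pillow), resizes a photo and re-saves it as an optimized JPEG. The function names are hypothetical, and the 1200-pixel width and quality of 80 are illustrative defaults, not official recommendations.

```python
def fit_width(width: int, height: int, max_width: int) -> tuple[int, int]:
    """Scale (width, height) so width <= max_width, keeping the aspect ratio."""
    if width <= max_width:
        return width, height  # never upscale
    return max_width, max(1, round(height * max_width / width))


def compress_photo(src: str, dst: str, max_width: int = 1200, quality: int = 80) -> None:
    """Resize to max_width and save as an optimized JPEG (requires Pillow)."""
    from PIL import Image  # imported here so fit_width works without Pillow

    with Image.open(src) as img:
        size = fit_width(img.width, img.height, max_width)
        # JPEG has no alpha channel, so convert to RGB before saving.
        resized = img.convert("RGB").resize(size)
        resized.save(dst, "JPEG", quality=quality, optimize=True)
```

For example, fit_width(4000, 3000, 1200) returns (1200, 900): less than a tenth of the original pixels, which is where most of the file-size savings come from.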

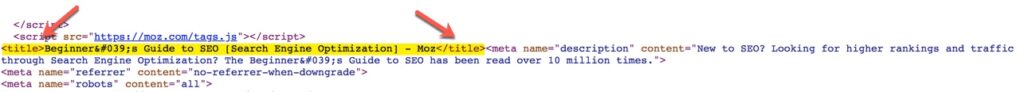

Lack of Proper Metadata and Meta-tags

Metadata and Meta-tags are the code behind webpages that gives Google and other search engines direction and insight regarding what’s on the page. Remember that search engine crawlers are essentially robots parsing the code on the page in order to understand what it is about and where it should rank in search.

Search engine crawlers have gotten much “smarter” over the years, but they still can’t “read” copy on a page the way a human does, so they rely on encoded metadata. The first element is the Title Tag, which gives a short text snapshot of what the page is about. The second is the Meta-Description, a short paragraph that goes into more detail about the contents of the page. (See an example of a Title Tag below.)
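In a page’s HTML, both elements sit in the head section. Here is a minimal Python sketch that builds them with proper escaping; head_tags is a hypothetical helper, and the 60- and 160-character warnings are common SEO rules of thumb for what search results tend to display, not limits published by Google.

```python
from html import escape

TITLE_SOFT_LIMIT = 60         # rule of thumb, not an official Google limit
DESCRIPTION_SOFT_LIMIT = 160  # rule of thumb, not an official Google limit


def head_tags(title: str, description: str) -> str:
    """Return a <title> tag and a meta description tag, HTML-escaped."""
    for label, text, limit in (
        ("title", title, TITLE_SOFT_LIMIT),
        ("description", description, DESCRIPTION_SOFT_LIMIT),
    ):
        if len(text) > limit:
            print(f"warning: {label} is {len(text)} chars and may be truncated")
    return (
        f"<title>{escape(title)}</title>\n"
        f'<meta name="description" content="{escape(description)}">'
    )
```

For example, head_tags("Electric Guitar for Beginners | Example Guitars", "A six-string electric guitar with a maple neck, built for first-time players.") returns two lines ready to paste into the page’s head; “Example Guitars” is a placeholder brand.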

Too often, website designers and webmasters skip adding Title Tags and Meta-Descriptions. This means the search engine crawlers have to do a lot more work to understand what the page is about, and Google may end up generating its own title and snippet from the page text, which may not present the page the way you would like.

When this happens, with no metatags to help the search engine crawler do its job, the page can lose ranking value and may appear with a weaker title and snippet, resulting in diminished placement and fewer clicks in organic search.
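To catch pages that are missing these tags, you can parse their HTML and check for them. A minimal Python sketch using the standard html.parser module; the class and function names are hypothetical, and how you collect each page’s HTML (a crawler, a CMS export, etc.) is assumed and left out.

```python
from html.parser import HTMLParser


class HeadTagAudit(HTMLParser):
    """Records a page's <title> text and meta description content."""

    def __init__(self) -> None:
        super().__init__()
        self.title = ""
        self.description = ""
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "title":
            self._in_title = True
        elif tag == "meta" and (attributes.get("name") or "").lower() == "description":
            self.description = (attributes.get("content") or "").strip()

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def missing_head_tags(html: str) -> list[str]:
    """Return which of the two tags are missing or empty on this page."""
    audit = HeadTagAudit()
    audit.feed(html)
    audit.close()
    problems = []
    if not audit.title.strip():
        problems.append("title")
    if not audit.description:
        problems.append("meta description")
    return problems
```

Running this over every URL in your sitemap gives a quick to-do list of pages that need a Title Tag, a Meta-Description, or both.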

Bloated Plug-ins

WordPress has opened up the digital publishing world for just about everybody, making it much easier to post and publish content on the digital web. Plug-ins, which are third-party “helper” applications that work with WordPress, can significantly enhance the experience of publishing content on the web.

That said, too often too many plug-ins are installed on a site and either not used or misused. When this happens, load time can slow to a crawl, because each active plug-in can add its own scripts, stylesheets, and database queries that the server and browser must process on every page view.

A good practice for improving Google rankings is to limit plug-ins and, where plug-ins must be used, to monitor the site’s load time with a tool like GTMetrix to see where plug-in activity might be slowing it down.

Remember that page load time is all about speed, and every millisecond counts. Optimizing image sizes and reducing plug-in usage will go a long way in improving your page load times, and adding metadata helps search engines understand your pages; together, these improve your overall rankings in search.

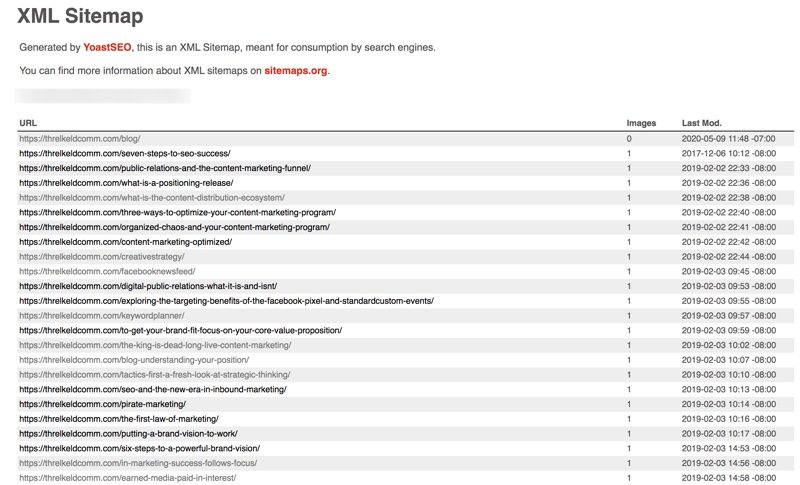

How to Improve Google Rankings: Step 4 — Update Sitemap XML File

You may not be familiar with what a Sitemap XML file is or what it does, but it’s an essential part of improving the chances that your site will get crawled efficiently, which in turn improves the chances that your pages will show up higher in search results.

The sitemap.xml file is a directory of sorts for Google and other search engine crawlers. When Google crawls a website, it starts at the top level to get a sense of what content is on the site and what needs to be crawled.

The first stop for the search engine bot is generally the robots.txt file, which is a small text-based document that sits at the root level of your site. Search engine crawlers will look at the robots.txt file for instructions on how to proceed with crawling the site.

A good practice is to include a link to your sitemap.xml file at the end of your robots.txt file. Once the search engine crawler has gone through the robots.txt file for initial instructions, it looks at the sitemap.xml file to get a sense of what pages in the site should be crawled.
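In robots.txt, the sitemap link is a single Sitemap: line containing the sitemap’s full URL (the line can appear anywhere in the file; the end is a common convention). The Python sketch below shows a sample file, with example.com as a placeholder domain and the /wp-admin/ rule purely illustrative, and reads it back with the built-in urllib.robotparser module, much as a crawler would. The site_maps() method needs Python 3.8 or later.

```python
from urllib.robotparser import RobotFileParser

# Sample robots.txt; example.com and the /wp-admin/ rule are placeholders.
ROBOTS_TXT = """\
User-agent: *
Disallow: /wp-admin/

Sitemap: https://www.example.com/sitemap.xml
"""


def sitemaps_from_robots(robots_txt: str) -> list[str]:
    """Return the Sitemap URLs declared in the text of a robots.txt file."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    # site_maps() returns None when the file declares no sitemaps.
    return parser.site_maps() or []


if __name__ == "__main__":
    print(sitemaps_from_robots(ROBOTS_TXT))
```

If this returns an empty list for your live robots.txt, crawlers reading the file won’t find your sitemap there either.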

Google uses sitemap.xml files, along with the links it discovers while crawling, to decide which pages to crawl and add to its index.

One reason pages don’t rank well in search is that the sitemap.xml file is not updated regularly, for example after a new page is created, an image is updated, or copy on an existing page is modified.

Too often, after adding new pages or updating existing pages, website owners forget to update the sitemap.xml file to capture this new content information in the directory.

So the new, content-optimized page gets published and is live on the web, but Google and other search engines have a harder time finding it, because they rely on the sitemap.xml file for guidance on what to crawl. And if a search engine can’t find the new content, it can’t index or rank it.

So if a page is updated, or a new page is created, and the change isn’t reflected in the sitemap.xml file, there’s a good chance the page will be slow to rank, or won’t rank high at all.

The steps to fix this situation are simple. As mentioned, update the sitemap.xml file after adding new content or modifying existing content. Note that some plug-ins like the Yoast Premium plug-in make creating and updating a sitemap.xml file a breeze.
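If your CMS or plug-in doesn’t maintain the sitemap for you, generating one is straightforward: it’s an XML list of page URLs in the sitemaps.org format, each with an optional lastmod date that should change whenever the page changes. A minimal Python sketch using only the standard library; build_sitemap is a hypothetical helper, and the URLs and dates in the usage note are placeholders.

```python
import xml.etree.ElementTree as ET
from datetime import date

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages: dict[str, date]) -> str:
    """Build sitemap XML from {page URL: date the page last changed}."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url, modified in sorted(pages.items()):
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url  # ElementTree escapes & and <
        # Bump lastmod whenever the page's content meaningfully changes.
        ET.SubElement(entry, "lastmod").text = modified.isoformat()
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body
```

For example, build_sitemap({"https://www.example.com/": date(2024, 1, 15)}) produces a one-entry sitemap; regenerate it whenever you add or update pages, upload it to your site’s root, and then submit it in Search Console.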

Once the sitemap.xml file has been updated, copy its URL, go to Google Search Console, and submit the sitemap URL in the section provided. Note that it can take several days, or up to a week, for Google to process the new sitemap.xml file, but once it does, Google knows your updated page exists and can crawl it.

And this will greatly improve the chances that this page will begin to rank higher in search.

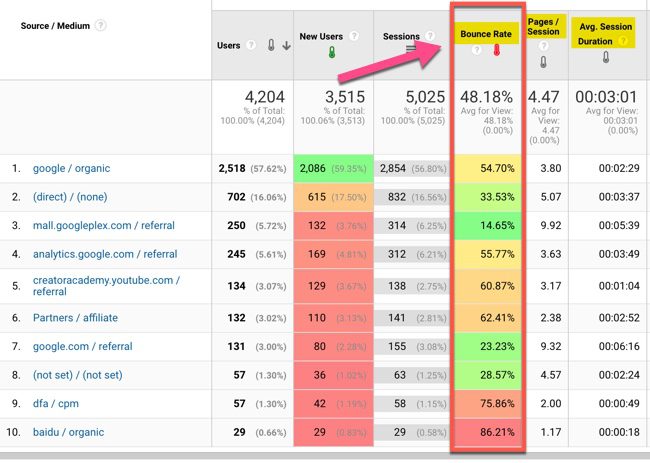

How to Improve Google Rankings: Step 5 — Fix Your Bounce Rate

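Bounce Rate is the percentage of visits (sessions) in which a user leaves your website after viewing a single page, without engaging further. In Google Analytics 4, it is the percentage of sessions that were not “engaged”; by default, a session counts as engaged if it lasts longer than 10 seconds, includes a key event (conversion), or includes two or more page views. Addressing a high bounce rate is a key step in improving your Google rankings.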
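Bounce rate is a simple ratio, but how it’s counted depends on the analytics version. The Python sketch below computes two common variants: the Universal Analytics style (share of single-page sessions) and the GA4 style (share of sessions that were not engaged, where, by default, engaged means lasting longer than 10 seconds, having a key event, or having two or more page views). The Session fields are a simplified, hypothetical stand-in for real analytics data, so treat the results as approximations of the reported numbers.

```python
from dataclasses import dataclass


@dataclass
class Session:
    pageviews: int
    duration_s: float
    key_events: int = 0


def bounce_rate_single_page(sessions: list[Session]) -> float:
    """Universal Analytics style: share of sessions with only one pageview."""
    if not sessions:
        return 0.0
    return sum(s.pageviews == 1 for s in sessions) / len(sessions)


def bounce_rate_ga4(sessions: list[Session], engaged_after_s: float = 10.0) -> float:
    """GA4 style: share of sessions that were not 'engaged'."""
    if not sessions:
        return 0.0

    def engaged(s: Session) -> bool:
        return s.duration_s > engaged_after_s or s.key_events > 0 or s.pageviews >= 2

    return sum(not engaged(s) for s in sessions) / len(sessions)
```

The two can differ a lot: a visitor who reads one page for two minutes is a bounce under the single-page definition but an engaged session in GA4, so check which definition your report uses before comparing numbers.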

While the core topic of this post is about avoiding pages that never get a single view, there’s a good chance that the remaining 10% of pages in the Ahrefs research that did get seen had a higher-than-desirable bounce rate.

If so, only a small percentage of the pages that did get views led to additional page views on the website.

I talked about Bounce Rate a little earlier in this post when discussing Behavior Flow Analysis. If you begin to see users dropping off in terms of page visits after visiting the initial landing page, this is a sign that you have work to do in terms of making your pages more engaging.

A high Bounce Rate tells Google and other search engines that your site is lacking the “stickiness factor” I mentioned earlier. The goal of your site, both for your visitors and in the eyes of the search engines, should be to keep visitors as engaged as possible with your content.

When you start seeing bounce rates as high as 70 or 80%, you know you have some work to do with regard to the copy on that particular page. If you find a page that has a high bounce rate, consider adding images or a video that might help keep a visitor engaged.

One tactic might be to create a short 1:30 video tutorial related to a topic on the page with a high bounce rate. The video doesn’t need to have premium production quality as long as it’s interesting and related to the content on the page. For even better SEO value, make sure the voiceover includes your keyword or keywords that you uncovered in your keyword research earlier, and include closed-caption subtitles in the video that can be picked up during the search bot crawl.
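Caption files are plain text, and YouTube accepts uploaded caption files in formats such as WebVTT (.vtt). The Python sketch below writes a WebVTT file from a list of timed captions; the function names and cue text are hypothetical, and in practice you would export the timings from your video editor or a transcription tool.

```python
def vtt_timestamp(seconds: float) -> str:
    """Format seconds as a WebVTT timestamp, e.g. 83.5 -> '00:01:23.500'."""
    millis = round(seconds * 1000)
    hours, millis = divmod(millis, 3_600_000)
    minutes, millis = divmod(millis, 60_000)
    secs, millis = divmod(millis, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d}.{millis:03d}"


def build_vtt(cues: list[tuple[float, float, str]]) -> str:
    """Build WebVTT text from (start_seconds, end_seconds, caption) cues.

    Caption text must not contain the string '-->'.
    """
    blocks = ["WEBVTT"]
    for start, end, text in cues:
        blocks.append(f"{vtt_timestamp(start)} --> {vtt_timestamp(end)}\n{text}")
    return "\n\n".join(blocks) + "\n"
```

Writing your keyword into the captions this way guarantees the exact phrasing, rather than relying on automatic captions to transcribe it correctly.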

In Summary

Improving your Google rankings should be a consistent and ongoing goal. Because the web is a dynamic and competitive environment, one that your competitors are also engaged with and adding new keywords to regularly, it’s important to stay on top of how your site is performing and improving in those areas that have a direct impact on your search engine rankings.

These five steps I mentioned in this post will go a long way in helping you improve your Google rankings now. There are certainly other steps you can take, but if you’re looking to get started in improving your rankings, take these five steps into consideration and you’ll be well on your way to better results in organic search.

Bill Threlkeld is president of Threlkeld Communications, Inc., a digital PR consultancy based in Santa Monica, California. Threlkeld Communications, Inc. specializes in Digital PR strategy and tactics, with a focus on integrated content campaigns. This approach to Digital PR is known as the Content Distribution Ecosystem, a unique content approach that synchronizes and integrates PR, Social Media, Blogs, Audio, Video, Email Marketing and other content.